As I evolved in my career to take on the role of a Node.js specialist, capable of identifying bottlenecks and addressing performance issues, I grew increasingly unhappy about the performance and size of the server-side stacks provided by SSR frameworks, and especially about the fact that most of them don't run on Fastify. I've written at length on the merits of adopting Fastify for Node.js servers.

It's now been over a year since I started working on fastify-vite, a plugin for integrating Vite application bundling into Fastify applications.

If you haven't heard about Vite, Fireship does a good job explaining it in 100 seconds. In early 2021, as Vite was approaching its 2.0 release, I realized a lot of the cruft I was used to could be replaced by Vite plugins, in a much smaller and cleaner setup. That's what fastify-vite is all about.

Yesterday I pushed the first public beta release of fastify-vite v3 to npm, with a complete refactoring and a shift towards providing architectural primitives rather than veering into specific advanced features for different client frameworks.

⁂

To demonstrate fastify-vite's power and flexibility, let's build a mini Next.js with it. I say mini because Next.js is a huge piece of software.

If you don't have time to read all of this now, you can jump straight into the code in fastify-vite's repository, under examples/react-next and examples/vue-next.

At one point installing Next.js got you 877 packages in node_modules. This was in late 2020, at the 10.0.0 release. The latest release now gets you only 14 packages installed through npm, but if you look at node_modules/next, you quickly realize they essentially opted out of npm package resolution for a faster install, with all dependencies being prepacked together with the framework's distribution bundle. The massive complexity and the dependency tree are still there.

You can integrate any Vite-bundled application with Fastify, with any framework that supports SSR. As a long-time Vue developer, I'm not particularly fond of React. In fact, as of late I've been experimenting a lot with Solid, which I think is well on its way to overtaking React's popularity in the coming years.

But as I was working on fastify-vite, writing examples for both React and Vue using the same idioms was an excellent way to test its flexibility.

For the mini Next.js experiment, I'm going to focus on the two most essential features which I think provide amazing developer experience in Next.js:

- the pages/ folder, which automates routing based on its hierarchical structure;
- and getServerSideProps(), which automates isomorphic data fetching.

Automating route registration with the pages/ folder

I'm going to start from react-hydration, a fastify-vite example that implements SSR for a React application and takes care of hydrating server-side populated Jotai state on the client. Here's how that example is currently set up:

├─ client
│  ├─ base.css
│  ├─ base.jsx
│  ├─ index.html
│  ├─ index.js
│  ├─ mount.js
│  ├─ state.js
│  ├─ routes.js
│  └─ views
│     ├─ index.jsx
│     └─ other.jsx
├─ renderer.js
├─ server.js
└─ vite.config.js

If you don't like using the JSX extension for React components, check out this Twitter thread where Evan You explains why that's necessary for Vite.

One of the limitations of this example is that you have to manually specify your routes in routes.js. For simple projects, it's nice to be able to just drop files in a pages/ folder. It's also nice to be able to specify dynamic routes using the [param] convention adopted by Next.js. For larger projects, you might want to still have all your routes defined in one place, as is the case for the react-hydration example. With fastify-vite, you can roll out your own routing mechanics.

So let's get started. First I renamed the views/ directory to pages/ and added a series of individual nested routes, index pages and dynamic pages. I also removed state.js (and the Jotai dependency) because we'll be using getServerSideProps() later to populate data from the server.

  ├─ client
  │  ├─ base.css
  │  ├─ base.jsx
  │  ├─ index.html
  │  ├─ index.js
  │  ├─ mount.js
  │  ├─ next.jsx
- │  ├─ views
+ │  ├─ pages
+ │  │  ├─ index.jsx
+ │  │  ├─ items
+ │  │  │  ├─ [id].jsx
+ │  │  │  ├─ index.jsx
+ │  │  │  └─ nested
+ │  │  │     ├─ [id].jsx
+ │  │  │     └─ index.jsx
+ │  │  └─ other.jsx
- │  ├─ state.js
  │  └─ routes.js
  ├─ renderer.js
  ├─ server.js
  └─ vite.config.js

Vite includes a handy utility to import several files at once. We can use import.meta.glob() to load all files matching a glob pattern.

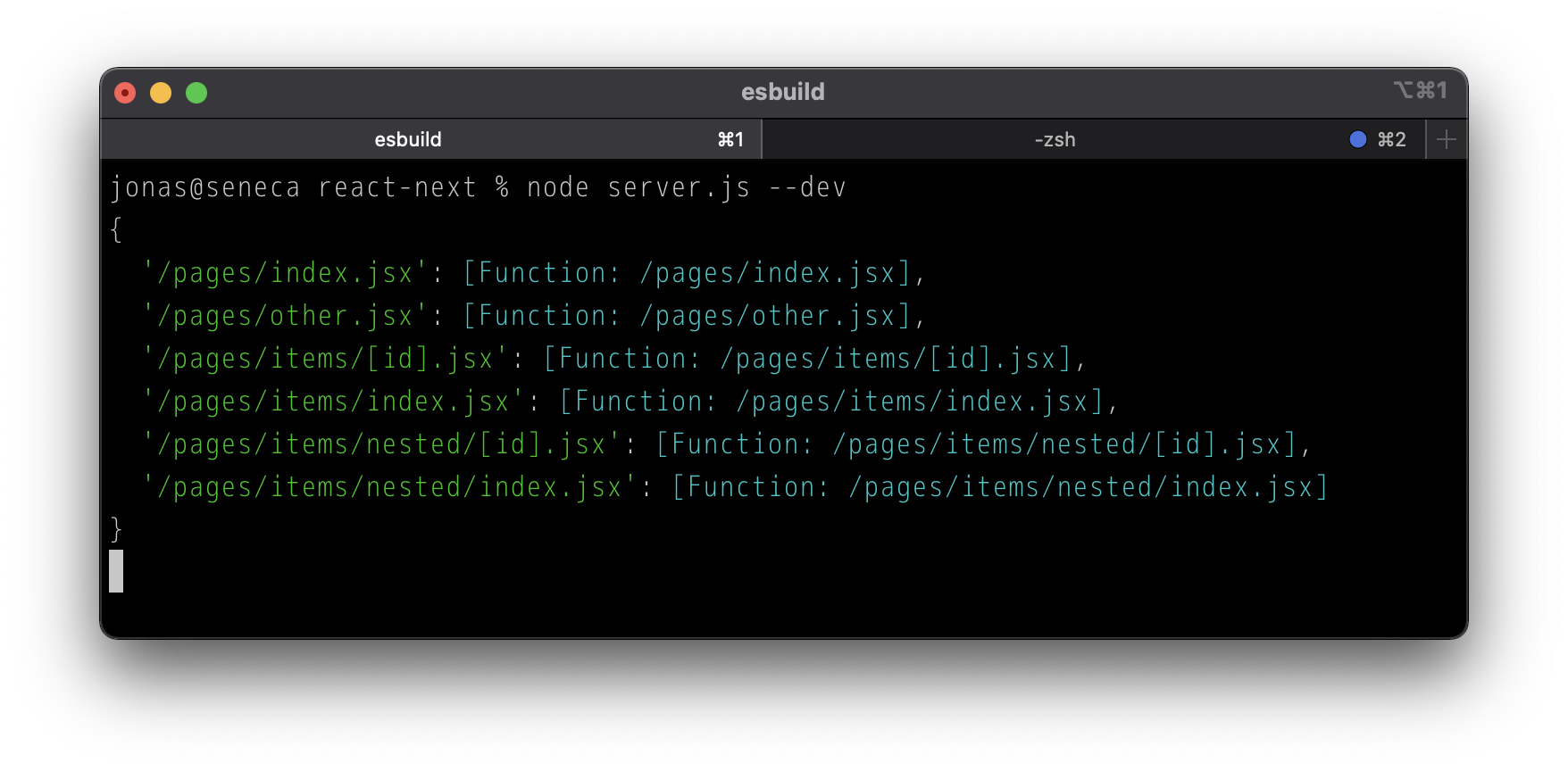

For example, import.meta.glob('/pages/**/*.jsx') gives:

That gives us a path:importer map, that is, a key for every file and an importer function, which is typically what you want for page-level code splitting. But for the purposes of this experiment, we want to fully import each page component file so we also pick up its getServerSideProps() function export.

We can still have page-level code splitting, but it would require a bit more code to get it working and that goes a little beyond the scope of this article.

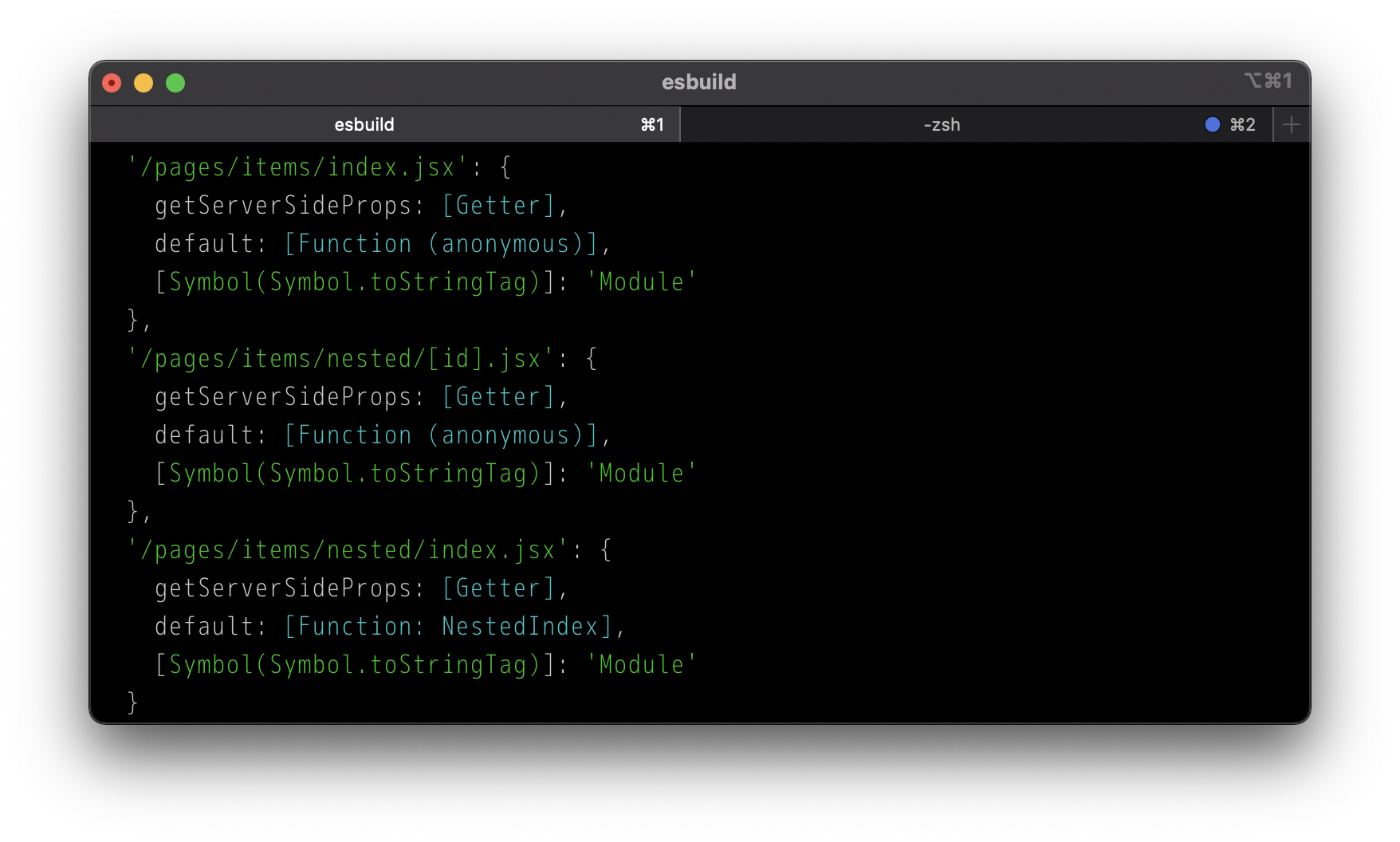

With import.meta.globEager('/pages/**/*.jsx'), we get everything we need:
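To make the difference between the two maps concrete, here's a rough sketch of both shapes using plain objects. The module contents and the pagesWithProps name are made up for illustration; the real maps are generated by Vite. Also note that newer Vite releases spell the eager variant as import.meta.glob(pattern, { eager: true }).

```javascript
// Hypothetical page modules standing in for files under pages/
const indexModule = { default: function Index () {} }
const itemModule = {
  default: function Item () {},
  getServerSideProps: async () => ({ item: 'example' }),
}

// Shape of import.meta.glob(): path -> importer function,
// each module is only loaded when its importer is called
const lazyMap = {
  '/pages/index.jsx': () => Promise.resolve(indexModule),
  '/pages/items/[id].jsx': () => Promise.resolve(itemModule),
}

// Shape of import.meta.globEager(): path -> already loaded module
const eagerMap = {
  '/pages/index.jsx': indexModule,
  '/pages/items/[id].jsx': itemModule,
}

// With the eager map, named exports can be inspected synchronously
const pagesWithProps = Object.keys(eagerMap)
  .filter((path) => typeof eagerMap[path].getServerSideProps === 'function')

console.log(pagesWithProps)
```

With the lazy map we would have to await every importer before knowing which pages export getServerSideProps(), which is the extra code mentioned above.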

Now we can work with that. Next I'll create a next.jsx file, no pun intended, to concentrate all of the Next.js functionality, starting with getPageRoutes():

export function getPageRoutes (importMap) {
  return Object.keys(importMap)
    // Ensure that static routes have
    // precedence over the dynamic ones
    .sort((a, b) => a > b ? -1 : 1)
    .map((path) => ({
      path: path
        // Remove /pages and .jsx extension
        .slice(6, -4)
        // Replace [id] with :id
        .replace(/\[(\w+)\]/, (_, m) => `:${m}`)
        // Replace '/index' with '/'
        .replace(/\/index$/, '/'),
      // The React component (default export)
      component: importMap[path].default,
      // The getServerSideProps individual export
      getServerSideProps: importMap[path].getServerSideProps,
    }))
}

Now we can just import it and use it from routes.js:

import { getPageRoutes } from './next.jsx'

export default getPageRoutes(import.meta.globEager('/pages/**/*.jsx'))

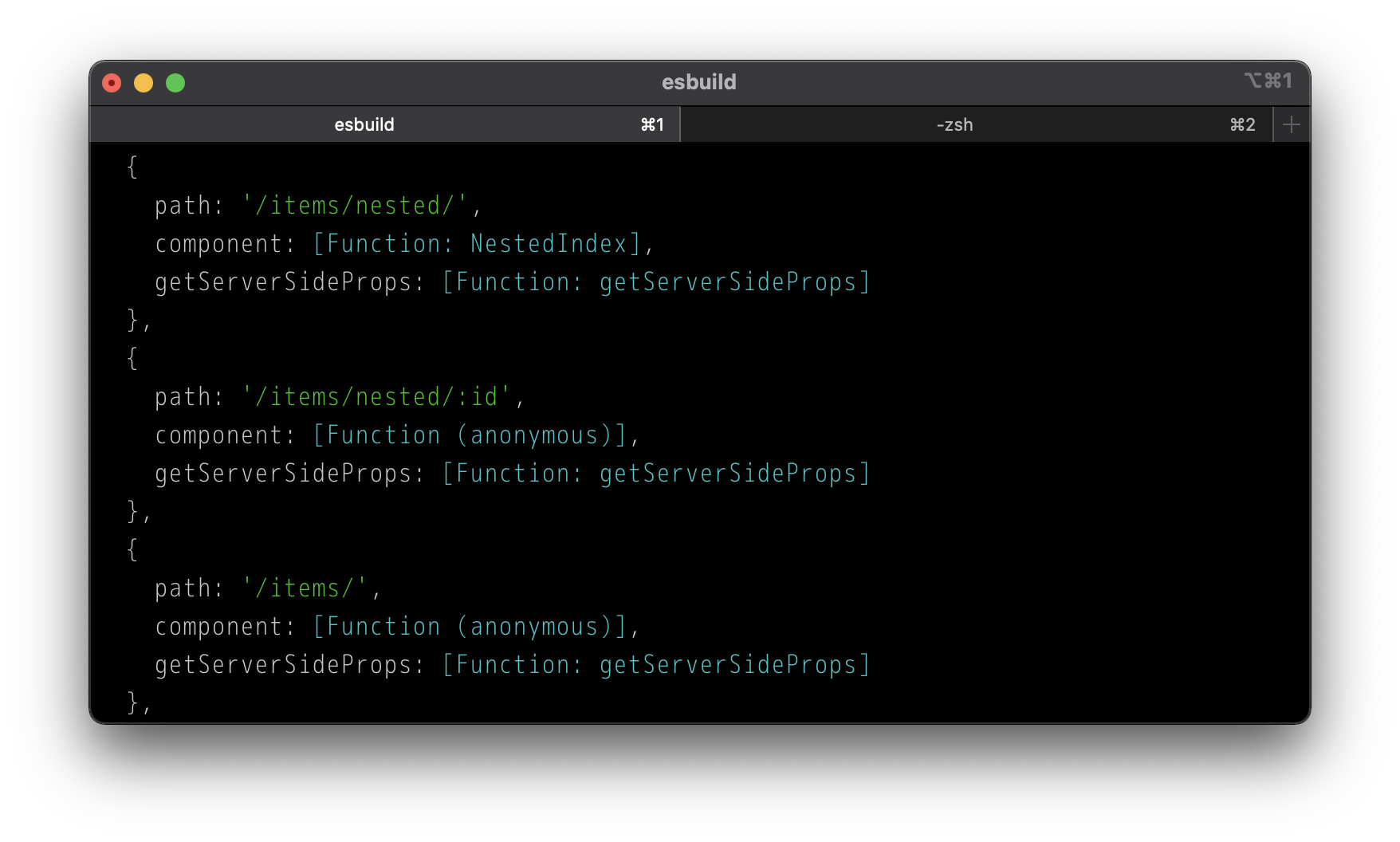

This is what we get from getPageRoutes():
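As a sanity check, here's a self-contained sketch that runs the same logic against a hypothetical import map mirroring the pages/ folder above. The page modules are empty placeholders, so only the resulting paths and their order are meaningful.

```javascript
// Same logic as getPageRoutes() above, repeated so the sketch runs on its own
function getPageRoutes (importMap) {
  return Object.keys(importMap)
    .sort((a, b) => a > b ? -1 : 1)
    .map((path) => ({
      path: path
        .slice(6, -4)
        .replace(/\[(\w+)\]/, (_, m) => `:${m}`)
        .replace(/\/index$/, '/'),
      component: importMap[path].default,
      getServerSideProps: importMap[path].getServerSideProps,
    }))
}

// Placeholder module, stands in for a real page file
const page = { default: function Page () {} }

// Hypothetical import map matching the pages/ tree shown earlier
const importMap = {
  '/pages/index.jsx': page,
  '/pages/other.jsx': page,
  '/pages/items/index.jsx': page,
  '/pages/items/[id].jsx': page,
  '/pages/items/nested/index.jsx': page,
  '/pages/items/nested/[id].jsx': page,
}

const paths = getPageRoutes(importMap).map((route) => route.path)

console.log(paths)
// [ '/other', '/items/nested/', '/items/nested/:id',
//   '/items/', '/items/:id', '/' ]
```

The reverse sort is what places each index route ahead of its dynamic [id] sibling, since '[' sorts before lowercase letters.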

One important detail: to get this routing scheme to work, we must enable ignoreTrailingSlash when creating the Fastify server instance, otherwise requests to items/ will land on the items/:id route handler.

const server = Fastify({ ignoreTrailingSlash: true })

Managing getServerSideProps() loading server-side

So it's time to start leveraging some of fastify-vite's magic. We'll use the createRoute() configuration function, which gets called for each of the routes exported by your Vite client module.

See fastify-vite's README for more info about this.

We want to register not only a server-side route to render the client-side route that we have, but also an extra /json endpoint based on the same route path, so we can retrieve getServerSideProps() over a JSON request as well when the user is navigating through the application client-side.

But when the user is accessing the route directly, it gets server-side rendered, in which case we also want to execute getServerSideProps() and have its data ready for client-side hydration. In that case we'll just append a preHandler Fastify route hook to the SSR route. Here's what it looks like:

function createRoute ({ handler, errorHandler, route }, scope, config) {
  if (route.getServerSideProps) {
    // If getServerSideProps is provided, register JSON endpoint for it
    scope.get(`/json${route.path}`, async (req, reply) => {
      reply.send(await route.getServerSideProps({
        req,
        ky: scope.ky,
      }))
    })
  }
  scope.get(route.path, {
    // If getServerSideProps is provided,
    // make sure it runs before the SSR route handler
    ...route.getServerSideProps && {
      async preHandler (req, reply) {
        req.serverSideProps = await route.getServerSideProps({
          req,
          ky: scope.ky,
        })
      }
    },
    handler,
    errorHandler,
    ...route,
  })
}
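To see what this registers without booting a server, here's a rough sketch that feeds two routes through a trimmed-down copy of createRoute(). The createRecordingScope() helper is purely hypothetical: it stands in for the Fastify instance and only records which paths were registered. The ky decorator and the final route spread are left out for brevity.

```javascript
// Trimmed copy of createRoute() above (no ky, no ...route spread)
function createRoute ({ handler, errorHandler, route }, scope) {
  if (route.getServerSideProps) {
    scope.get(`/json${route.path}`, async (req, reply) => {
      reply.send(await route.getServerSideProps({ req }))
    })
  }
  scope.get(route.path, {
    ...route.getServerSideProps && {
      async preHandler (req) {
        req.serverSideProps = await route.getServerSideProps({ req })
      }
    },
    handler,
    errorHandler,
  })
}

// Hypothetical stand-in for a Fastify instance: it records each
// registered path and whether its options include a preHandler hook
function createRecordingScope () {
  const registered = []
  return {
    registered,
    get (path, options) {
      registered.push({
        path,
        hasPreHandler: typeof options.preHandler === 'function',
      })
    },
  }
}

const scope = createRecordingScope()
const handler = () => {}
const errorHandler = () => {}

// A page without getServerSideProps()
createRoute({ handler, errorHandler, route: { path: '/other' } }, scope)

// A page with getServerSideProps()
createRoute({
  handler,
  errorHandler,
  route: {
    path: '/items/:id',
    getServerSideProps: async () => ({ item: 'example' }),
  },
}, scope)

console.log(scope.registered)
```

The page without getServerSideProps() produces a single SSR route, while the other one produces both the /json endpoint and an SSR route carrying the preHandler hook.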

Notice how ky is also passed to getServerSideProps(). That is a reference to a ky client decorator registered in server.js. I like ky: it's a nice little wrapper around fetch(). Of course, if you're using Node v18+ (or v17.5+ with the --experimental-fetch flag), you could just use the native one.

In this case I'm using ky-universal, because that also works client-side. Even though that's not how I'm using it in this example, it could be the case if we were to expand it to also have a form that needs to perform POST JSON requests to add more items to the server's todoList.

The createRenderFunction() fastify-vite configuration function is nearly the same as the one from the react-hydration example, the only difference being that we pass serverSideProps instead of data to the SSR context.

Managing getServerSideProps() loading client-side

Now the real fun begins. Let's adapt the client to work with getServerSideProps(), no matter if it's provided through client-side hydration on first-render or if the user is navigating via React Router and a JSON request needs to be made to retrieve it.

First we modify base.jsx to use a PageManager for the routes:

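// The first four imports are assumed from context (React Router v6)
import React, { Suspense } from 'react'
import { BrowserRouter } from 'react-router-dom'
import { StaticRouter } from 'react-router-dom/server'
import routes from './routes.js'
import { PageManager } from './next.jsx'

const Router = import.meta.env.SSR ? StaticRouter : BrowserRouter

export function createApp (ctx, url) {
  return (
    <Suspense>
      <Router location={url}>
        <PageManager routes={routes} ctx={ctx} />
      </Router>
    </Suspense>
  )
}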

Next, in next.jsx, we create the PageManager component. Its job is to wrap every route component under a Page component that takes the SSR context, if available, and also a flag indicating whether or not the page has getServerSideProps() defined and data fetching needs to occur during client-side navigation as well:

export function PageManager ({ routes, ctx }) {
  return (
    <Routes>{
      routes.map(({ path, component, getServerSideProps }) => {
        return <Route key={path} path={path} element={
          <Page
            ctx={ctx}
            path={path}
            hasServerSideProps={!!getServerSideProps}
            component={component} />
        } />
      })
    }</Routes>
  )
}

And here's how we control the rendering of each route component accounting for the possibility of having to retrieve data prior to rendering:

function Page ({
  ctx,
  hasServerSideProps,
  component: Component
}) {
  // Hooks must be called unconditionally on every render,
  // so read the location before any early return
  const { pathname, search } = useLocation()
  // If running on the server...
  // See if we already have serverSideProps populated
  // via the registered preHandler hook and passed
  // down via the SSR context
  if (ctx) {
    if (ctx.serverSideProps) {
      return <Component {...ctx.serverSideProps} />
    } else {
      return <Component />
    }
  }
  // If running on the client...
  // Retrieve serverSideProps hydration if available
  let serverSideProps = window.hydration.serverSideProps
  // Ensure hydration is always cleared after the first page render
  window.hydration = {}
  if (hasServerSideProps) {
    // First check if we have serverSideProps hydration
    if (serverSideProps) {
      return <Component {...serverSideProps} />
    }
    try {
      // If not, fetch serverSideProps from the JSON endpoint
      serverSideProps = fetchWithSuspense(`${pathname}${search}`)
      return <Component {...serverSideProps} />
    } catch (error) {
      // If it's an actual error...
      if (error instanceof Error) {
        return <p>Error: {error.message}</p>
      }
      // If it's just a promise (suspended state)
      throw error
    }
  }
  return <Component />
}

Notice how we attempt to use window.hydration. It should only be available right after first-render (SSR). To ensure the value is cleared before the user navigates to another page, we always reset it on the Page component.

The amazingly obtuse Suspense API

So you're probably wondering about fetchWithSuspense(), and also probably:

// If it's just a promise (suspended state)
throw error

To make our automated page-level getServerSideProps() fetching work client-side, we have to rely on React's Suspense API, whose use for data fetching is still experimental. I don't know where to begin. After the time I spent getting this to work, I'm convinced the React community is suffering from some sort of collective Stockholm syndrome. For reasons beyond my understanding, React is still the most popular framework.

I say this tongue-in-cheek, of course. I hope I don't have to list here all the reasons why React has immense value to the JavaScript ecosystem, and even if I choose not to use it for my projects, I'm frequently inspired by Dan Abramov.

Alas, let's start with the fact that the juicier bits of documentation around Suspense can still only be found in React 17's documentation, not React 18's, where it matured quite a bit. Then there's the fact that there's no precise documentation on what Suspense-flavored loading actually requires, just a walkthrough covering a CodeSandbox example where you're expected to fill in the gaps with your own understanding. For instance, in one segment, it states:

React tries to render <ProfileTimeline>. It calls resource.posts.read(). Again, there’s no data yet, so this component also "suspends". React skips over it too, and tries rendering other components in the tree.

Now, let me try and break it down for you the best way I can. React will consider a component suspended if you run a function inside of it that throws a promise. You read that right, it expects you to throw a promise. My spider sense is tingling on the obvious code smell of this pattern, but let's move on. A function that suspends rendering of a React component will throw a promise, indicating the suspended status. It can also throw an error, indicating failure, or just return the loaded data. In both of those cases the suspended status clears and rendering proceeds.

Now let's take a look at another segment of the same documentation:

If you’re working on a data fetching library, there’s a crucial aspect of Render-as-You-Fetch you don’t want to miss. We kick off fetching before rendering.

// Start fetching early!
const resource = fetchProfileData();

// ...

function ProfileDetails() {
  // Try to read user info
  const user = resource.user.read();
  return <h1>{user.name}</h1>;
}

Wait, what? What is that resource doing outside of the component code? How am I supposed to control loading flow when I have to create a special resource object outside my component? That's the key thing to understand here: the function you call inside the component must work against a pre-existing promise, which is recommended to be managed by a special resource object that tracks its state. In short, you're supposed to create a Promise only once, and then just access its state from the component as React is waiting for it to resolve.
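Stripped of React entirely, that contract can be sketched in a few lines. The createResource() helper below is a hypothetical name of mine, not a React API: it wraps a promise created once, and its read() method throws the promise while pending, throws the error on failure, or returns the data once loaded.

```javascript
// Hypothetical helper, not part of React: tracks the state of
// a promise that was created once, outside of any rendering
function createResource (promise) {
  let status = 'pending'
  let result
  const suspender = promise.then(
    (data) => { status = 'done'; result = data },
    (error) => { status = 'failed'; result = error },
  )
  return {
    read () {
      // Suspended: throw the promise so the caller can wait on it
      if (status === 'pending') throw suspender
      // Failed: throw the actual error
      if (status === 'failed') throw result
      // Loaded: return the data
      return result
    },
  }
}

const resource = createResource(Promise.resolve({ name: 'Ada' }))

// The first read happens before the promise settles, so it throws
let thrown
try {
  resource.read()
} catch (value) {
  thrown = value
}
console.log(thrown instanceof Promise) // true

// Once the thrown promise settles, reading returns the data
thrown.then(() => {
  console.log(resource.read()) // { name: 'Ada' }
})
```

A Suspense boundary does roughly what the try/catch does here: it catches the thrown promise, waits for it, and then renders the component again.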

Armed with this hard-earned knowledge, let's implement fetchWithSuspense(), used by the Page component defined earlier. First we'll create a suspenseMap, where we'll hold references to all active requests. The rest of the rundown follows in the code.

const suspenseMap = new Map()

function fetchWithSuspense (path) {
  let loader
  // When fetchWithSuspense() is called the first time inside
  // a component, it'll create the resource object (loader) for
  // tracking its state, but the next time it's called, it'll
  // return the same resource object previously saved
  if (loader = suspenseMap.get(path)) {
    // Handle error, suspended state or return loaded data
    if (loader.error || loader.data?.statusCode === 500) {
      if (loader.data?.statusCode === 500) {
        throw new Error(loader.data.message)
      }
      throw loader.error
    }
    if (loader.suspended) {
      throw loader.promise
    }
    // Remove from suspenseMap now that we have data
    suspenseMap.delete(path)
    return loader.data
  } else {
    loader = {
      suspended: true,
      error: null,
      data: null,
      promise: null,
    }
    loader.promise = fetch(`/json${path}`)
      .then((response) => response.json())
      .then((loaderData) => { loader.data = loaderData })
      .catch((loaderError) => { loader.error = loaderError })
      .finally(() => { loader.suspended = false })
    // Save the active suspended state to track it
    suspenseMap.set(path, loader)
    // Call again for handling tracked state
    return fetchWithSuspense(path)
  }
}

And that's all we need! Here's what one of the page components looks like:

import React from 'react'
import { Link } from 'react-router-dom'

export async function getServerSideProps ({ req, ky }) {
  const todoList = await ky('api/todo-list').json()
  return { item: todoList[req.params.id] }
}

export default function NestedItem ({ item }) {
  return (
    <>
      <p>{ item }</p>
      <p>
        <Link to="/">Go to the index</Link>
      </p>
    </>
  )
}

You can inspect and play with examples/react-next in fastify-vite's repository. Or jump straight to next.jsx to digest all this madness.

Why stop here? Let's do the same for Vue 3 now

Why not bring a bit of Next.js goodness to Vue 3? Nuxt.js already has its own idioms, but perhaps I'm suffering from a bit of the React Stockholm syndrome now because I really want to see this working. Thankfully, in Vue-land things are a little less problematic. Well, that's an understatement.

I don't know how this eluded React Router's authors, but having navigation guards hooked into the router is a pretty good idea! That means we don't need all those PageManager, Page and Suspense shenanigans. We can just hook into the router directly with the beforeEach() navigation guard and execute a JSON fetch to getServerSideProps() for every client-side route navigation.

But just to be a little fancy about it, let's turn it into a Vue plugin:

export function createPageManager ({
  ctx,
  router,
  routes,
  ssr
}) {
  return (instance) => {
    const globalProperties = instance.config.globalProperties
    globalProperties.$error = null
    if (ssr) {
      // Populate serverSideProps with SSR context data
      globalProperties.$serverSideProps = ctx.serverSideProps
    } else {
      // Populate serverSideProps with hydrated SSR context data if available
      globalProperties.$serverSideProps = window.hydration.serverSideProps
    }
    // A way to quickly access getServerSideProps by matched path
    const routeMap = Object.fromEntries(
      routes.map(({ path, getServerSideProps }) => {
        return [path, getServerSideProps]
      })
    )
    // Set up Vue Router hook
    if (!import.meta.env.SSR) {
      router.beforeEach(async (to) => {
        // Ensure hydration is always reset after a page renders
        window.hydration = {}
        // If getServerSideProps is available...
        if (routeMap[to.matched[0].path]) {
          await fetch(`/json${to.path}`)
            .then((response) => response.json())
            .then((data) => {
              if (data.statusCode === 500) {
                globalProperties.$error = data.message
              } else {
                globalProperties.$serverSideProps = data
              }
            })
            .catch((error) => {
              globalProperties.$error = error
            })
        }
      })
    }
  }
}

Here's what one of the Vue page components looks like:

<script>
export async function getServerSideProps ({ req, ky }) {
  const todoList = await ky('api/todo-list').json()
  return { item: todoList[req.params.id] }
}
</script>

<template>
  <p>{{ $serverSideProps.item }}</p>
  <p>
    <router-link to="/">
      Go to the index
    </router-link>
  </p>
</template>

You're not supposed to use globalProperties. The politically correct way to achieve this is by using app.provide(), but we're just having fun here and globalProperties gets us a really short and sweet usage pattern.

That's about the only difference between the Vue and React examples. The getPageRoutes() function is roughly the same (it just loads vue files instead of jsx), and the createRoute() function reads exactly the same.

You can inspect and play with examples/vue-next in fastify-vite's repository.

⁂

My goal with this article was to demonstrate fastify-vite's ability to serve as a micro framework for building full stack frameworks.

Back in my Python days, there was a significant breakthrough in how Python web servers were written: WSGI, or, Web Server Gateway Interface. WSGI brought a lot of standardization to how Python applications operated as web servers and generally helped the Python community leap forward with a lot of innovation focused on areas that really mattered instead of the basic mechanics of connecting everything together. With the advent of SSR applications, I always missed a standardized approach for unifying client-side and server-side rendering modes.

I believe fastify-vite brings something close to that to the table. Not a universal, generic standard since it builds heavily on Fastify, so it is opinionated to some extent. But if you're using Fastify, it gives you a toolbox to integrate with virtually any kind of Vite-powered application, with full control over the whole pipeline.

⁂

That being said, the conveniences of a full stack framework that implements the most common and advanced features required by state-of-the-art applications are desirable. Going with Fastify and Vite alone at this point means you'll have to do a lot of that work by yourself.

I'm working on a new full stack framework based on Fastify: Fastify DX.

It makes use of zx, a toolbox for running shell scripts and external processes, and tries to provide the minimum amount of glue code to reduce the boilerplate you have to write. Right now Fastify DX is under active development.

It builds upon this newest release of fastify-vite and features more fully-featured built-in renderers for popular client frameworks, as well as many sensible inclusions to any Fastify application. My hope is that it'll be fastify-cli's spiritual successor, reshaped for enhanced developer productivity and SSR tooling.

Subscribe to the newsletter to be one of the first to hear about the public beta!